Imagine seeing the world from a single vantage point that gives you a complete picture of everything around you. That is roughly what the concept known as Bev Vance, a bird's-eye-view (BEV) representation, brings to how self-driving vehicles understand their surroundings. Instead of working with fragmented per-sensor views, the vehicle pieces together one clearer, more intuitive map of the road and everything on it. This shift matters a great deal for how cars learn to drive themselves safely and smoothly.

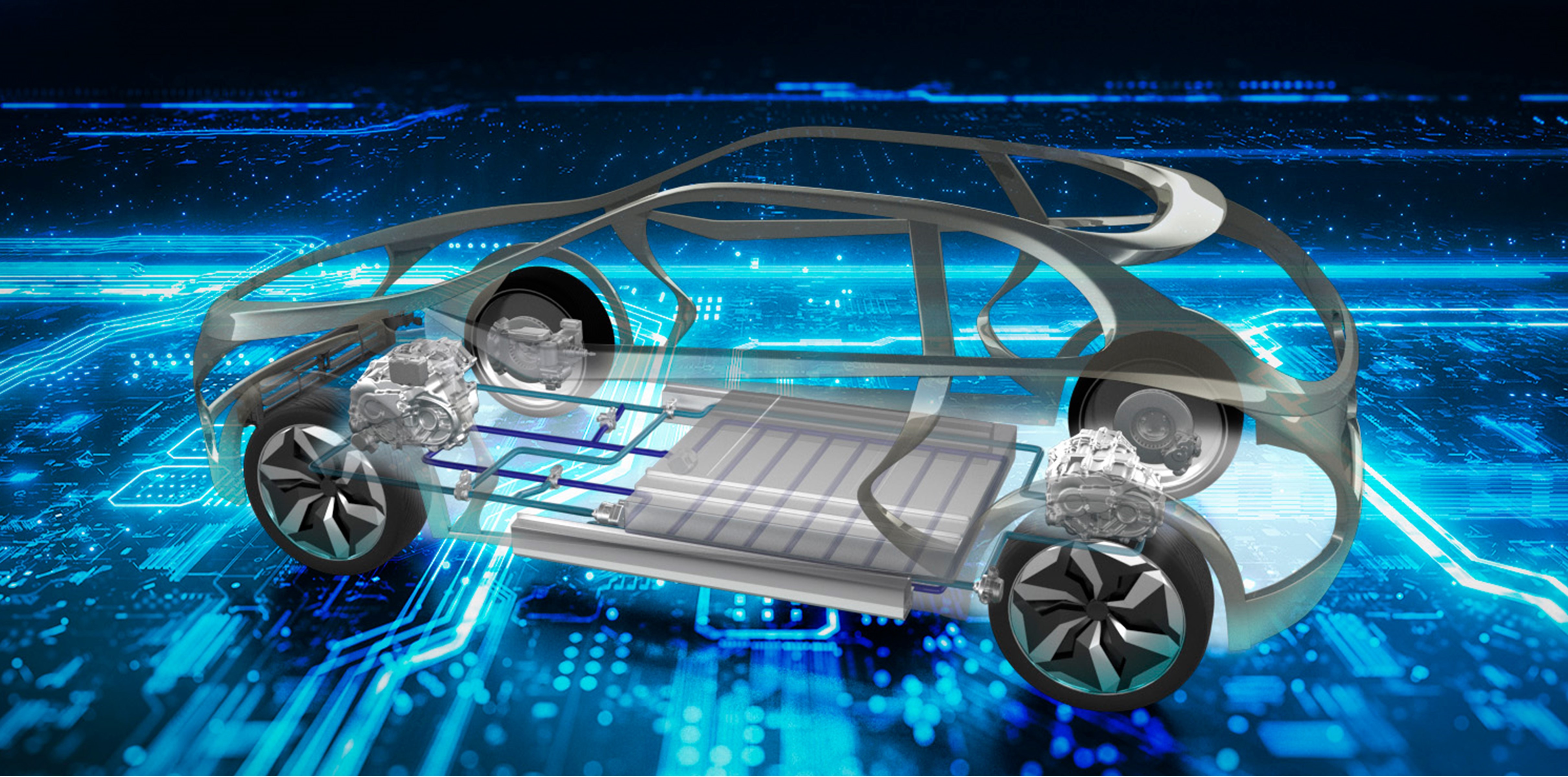

For a long time, autonomous vehicles have made sense of their environment with several sensors, each contributing one piece of the puzzle. Think of it as building a picture from several camera angles: some close up, some far away, some looking left, others looking right. Bev Vance, as a framework, brings these disparate pieces of information together into one coherent layout. It is like having a bird look down from above and see everything at once, which is exactly what a car needs to know where it is and what is happening around it.

This way of seeing is a fundamental change in how these cars process information. It helps them move from spotting individual objects to understanding the relationships between those objects and how they move through space. As a result, the car's "brain" is better at predicting what might happen next, making decisions, and planning its path with more confidence. In short, it gives the vehicle a more complete and actionable sense of its immediate world, which is what safer roads require.

Table of Contents

- What is the Core Idea Behind Bev Vance?

- How Does Bev Vance Gather Information?

- Types of Bev Vance Perception Methods

- Why is Bev Vance So Important for Self-Driving?

- The Bev Vance Layer: A Window into the Vehicle's Mind

- Bev Vance and the Reach of Perception

- Looking Ahead with Bev Vance

- The Impact of Bev Vance on Future Mobility

What is the Core Idea Behind Bev Vance?

At its heart, Bev Vance is about creating a unified, top-down view of a vehicle's surroundings. Instead of seeing things from a regular camera's eye level, where distant objects look smaller and nearer ones block those behind them, Bev Vance helps the car build a picture as if someone were looking straight down from above. This overhead perspective makes it much simpler to work out where things are relative to each other, how big they are, and which way they face. For autonomous systems, that is arguably a game-changer.
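To make the top-down idea concrete, here is a minimal Python sketch of how a point around the vehicle could be assigned to a cell of an overhead grid. The grid extent (±50 m), the 0.5 m cell size, the axis convention (x forward, y left, in metres), and the function name `ego_to_bev_cell` are illustrative assumptions, not part of any specific Bev Vance implementation.

```python
from typing import Optional, Tuple


def ego_to_bev_cell(x: float, y: float, half_range: float = 50.0,
                    resolution: float = 0.5) -> Optional[Tuple[int, int]]:
    """Map an ego-frame point (x forward, y left, metres) to (row, col) in a
    square BEV grid centred on the vehicle, or None if it falls outside.

    Hypothetical helper: grid extent, cell size and axes are assumptions.
    """
    size = int(round(2 * half_range / resolution))  # cells per side
    # Row 0 is the far-forward edge; column 0 is the far-left edge.
    row = int((half_range - x) // resolution)
    col = int((half_range - y) // resolution)
    if 0 <= row < size and 0 <= col < size:
        return row, col
    return None
```

With these defaults the vehicle sits at cell (100, 100), and a car 10 m straight ahead lands in row 80 of the same column, so relative positions become simple grid offsets.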

The system does more than take a simple snapshot from above. Each "query" in the Bev Vance framework (roughly, one learnable slot per location on the overhead grid) gathers information about both where things are in space and how they change over time. It does this by repeatedly fusing spatial information from the sensors with information about how things moved or appeared in earlier moments. Repeating this process lets location and time inform each other, giving the system a more accurate and complete picture of what is going on.

This repeated fusion of spatial and temporal details means the system keeps refining its view. It considers not only what is there right now but also where things have been and where they might be going. That ongoing refinement is what allows a better understanding of complex road situations: the vehicle can predict movements and react appropriately rather than guess, which is what you want in a self-driving car.


How Does Bev Vance Gather Information?

Gathering the information for a comprehensive Bev Vance view relies on a few clever techniques. One is "spatial cross-attention," which lets each location in the overhead grid pull together features from the sensor views that observe that spot around the vehicle. It acts like a targeted focus: the system identifies the relevant details in each sensor input and combines them into one coherent spatial map.
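The following Python sketch shows, in heavily simplified form, what one grid query does during spatial cross-attention: it projects its 3D reference point into every camera, keeps only the cameras that actually see it, and averages the features found there. It assumes pinhole cameras with known extrinsics and intrinsics, and it replaces the learned attention weights and sampling offsets of real implementations with nearest-pixel sampling; `Camera`, `project`, and `gather_for_query` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec = List[float]


@dataclass
class Camera:
    rotation: Sequence[Sequence[float]]   # 3x3, ego frame -> camera frame
    translation: Sequence[float]          # 3-vector, ego frame -> camera frame
    fx: float
    fy: float
    cx: float
    cy: float
    features: List[List[Vec]]             # H x W x C image feature map


def project(cam: Camera, p: Sequence[float]) -> Optional[Tuple[int, int]]:
    """Pinhole projection of an ego-frame point to the nearest feature pixel.

    Returns None if the point is behind the camera or lands off the image.
    """
    pc = [sum(cam.rotation[i][j] * p[j] for j in range(3)) + cam.translation[i]
          for i in range(3)]
    if pc[2] <= 0:                        # behind the camera (z = optical axis)
        return None
    u = cam.fx * pc[0] / pc[2] + cam.cx
    v = cam.fy * pc[1] / pc[2] + cam.cy
    r, c = int(round(v)), int(round(u))   # nearest-pixel sampling (simplification)
    if 0 <= r < len(cam.features) and 0 <= c < len(cam.features[0]):
        return r, c
    return None


def gather_for_query(ref_point: Sequence[float], cams: List[Camera]) -> Optional[Vec]:
    """Average the features sampled in every camera that sees ref_point."""
    hits = []
    for cam in cams:
        rc = project(cam, ref_point)
        if rc is not None:
            hits.append(cam.features[rc[0]][rc[1]])
    if not hits:
        return None                       # no camera observes this grid cell
    return [sum(f[k] for f in hits) / len(hits) for k in range(len(hits[0]))]
```

Averaging over only the cameras that "hit" the point is what lets one grid cell combine evidence from overlapping views without being diluted by cameras pointing elsewhere.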

Bev Vance also looks beyond the present moment through "temporal self-attention," which examines how things have changed over time. Picture a car moving down a street: the temporal component helps the system remember where other cars or pedestrians were a moment ago and how they have moved since. Aggregating these time-based features is essential in dynamic situations such as traffic flow or someone crossing the street, because it lets the system build a continuous story of what is happening rather than a series of isolated snapshots.
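Before features from the previous moment can be reused, they have to be lined up with where the vehicle is now. This Python sketch assumes pure forward and sideways motion with no turning, uses an overhead grid with row 0 at the front edge and column 0 at the left edge, and stands in for the learned temporal attention with a fixed blend weight `alpha`; `align_previous_bev` and `fuse_temporal` are hypothetical names.

```python
from typing import List

Grid = List[List[float]]


def align_previous_bev(prev: Grid, dx: float, dy: float, resolution: float) -> Grid:
    """Re-index last frame's BEV grid into the current ego frame.

    Assumes pure translation (no yaw change): the vehicle moved dx metres
    forward and dy metres left. A static point now at cell (r, c) sat at
    (r - dx/res, c - dy/res) in the previous grid. Cells with no history are 0.
    """
    shift_r = round(dx / resolution)
    shift_c = round(dy / resolution)
    n_rows, n_cols = len(prev), len(prev[0])
    out = [[0.0] * n_cols for _ in range(n_rows)]
    for r in range(n_rows):
        for c in range(n_cols):
            pr, pc = r - shift_r, c - shift_c
            if 0 <= pr < n_rows and 0 <= pc < n_cols:
                out[r][c] = prev[pr][pc]
    return out


def fuse_temporal(current: Grid, aligned_prev: Grid, alpha: float = 0.5) -> Grid:
    """Blend current and aligned previous features cell by cell.

    A fixed weight is an assumption; attention would learn this per cell.
    """
    return [[alpha * c + (1 - alpha) * p for c, p in zip(cur_row, prev_row)]
            for cur_row, prev_row in zip(current, aligned_prev)]
```

For example, if an object occupied the front-centre cell last frame and the car has since moved one cell forward, alignment moves that evidence one row closer to the vehicle before it is blended with the new observation.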

Spatial cross-attention and temporal self-attention work hand in hand. The two steps are repeated over several layers, so spatial and temporal information can reinforce each other, and each round blends features more precisely. The vehicle's perception becomes sharper and more detailed with every round, which is crucial for good driving decisions. You could call it an iterative refinement loop.
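The alternation described above can be sketched as a simple loop. Here `temporal_step` and `spatial_step` are placeholder callables standing in for the two attention operations, `bev_encoder` is a hypothetical name, and the default of six rounds is an assumption chosen for illustration; real encoders also interleave normalization and feed-forward layers, which are omitted.

```python
from typing import Callable, List

Grid = List[List[float]]


def bev_encoder(queries: Grid, prev_bev: Grid,
                temporal_step: Callable[[Grid, Grid], Grid],
                spatial_step: Callable[[Grid], Grid],
                num_layers: int = 6) -> Grid:
    """Alternate temporal and spatial refinement for num_layers rounds."""
    bev = queries
    for _ in range(num_layers):
        bev = temporal_step(bev, prev_bev)  # mix in last frame's (aligned) BEV
        bev = spatial_step(bev)             # pull in current sensor evidence
    return bev
```

Because each round feeds the refined grid back in, evidence gathered spatially in one round shapes how history is weighed in the next, which is the "helping each other out" effect the text describes.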

Types of Bev Vance Perception Methods

When we talk about how Bev Vance "sees" the world, there are three main ways it gathers its raw information, each with its own strengths. These approaches give flexibility in how a self-driving system is built, depending on what it needs to do and which sensors are available.

First, there is "Bev Vance LiDAR." LiDAR (light detection and ranging) emits laser pulses and measures how long they take to bounce back, producing a detailed three-dimensional "point cloud" of the environment. LiDAR measures distances and shapes very accurately, even in low light, so it yields a precise geometric map of the surroundings and a strong foundation for the top-down view, mainly because it captures depth directly.
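A common first step with LiDAR is to flatten the point cloud into the overhead grid. This Python sketch keeps, for each occupied cell, the highest point that falls into it, and stores only occupied cells in a dictionary. The ±50 m extent, 0.5 m cells, x-forward/y-left axes, and the name `rasterize_points` are assumptions for illustration; real systems typically learn richer per-cell features than a single height value.

```python
from typing import Dict, Iterable, Tuple


def rasterize_points(points: Iterable[Tuple[float, float, float]],
                     half_range: float = 50.0,
                     resolution: float = 0.5) -> Dict[Tuple[int, int], float]:
    """Build a sparse BEV height map: the maximum z per occupied cell."""
    size = int(round(2 * half_range / resolution))
    height: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        row = int((half_range - x) // resolution)  # row 0 = forward edge
        col = int((half_range - y) // resolution)  # col 0 = left edge
        if 0 <= row < size and 0 <= col < size:    # drop points outside the grid
            key = (row, col)
            height[key] = max(z, height.get(key, float("-inf")))
    return height
```

Two returns from the same car body collapse into one cell holding the taller height, while a return 80 m ahead is simply outside this grid.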

Next is "Bev Vance Camera," which relies on cameras. Cameras are widespread and provide rich visual information about color, texture, and object identity. The difficulty is that cameras see the world in perspective, not from above, so algorithms must transform their 2D images into the 3D bird's-eye view. This is harder than with LiDAR, which measures depth directly, but cameras capture information LiDAR cannot, such as the text on a sign or the color of a traffic light.
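The simplest way to move a camera pixel into the top-down view is inverse perspective mapping (IPM): assume the road is flat and intersect the pixel's viewing ray with the ground. The Python sketch below assumes a level, forward-facing pinhole camera at a known height; the function name `pixel_to_ground` and its parameters are hypothetical. It also shows why camera-to-BEV conversion is hard: anything not on the ground (a car's roof, a pedestrian's head) gets placed at the wrong distance, which is one reason learned view transforms are widely used instead.

```python
from typing import Optional, Tuple


def pixel_to_ground(u: float, v: float, fx: float, fy: float, cx: float,
                    cy: float, cam_height: float) -> Optional[Tuple[float, float]]:
    """Back-project a pixel onto a flat ground plane (inverse perspective mapping).

    Assumes a level pinhole camera (x right, y down, z forward) mounted
    cam_height metres above flat ground. Returns (forward, left) in metres
    from the ground point directly below the camera, or None for pixels at
    or above the horizon.
    """
    ray_x = (u - cx) / fx
    ray_y = (v - cy) / fy
    if ray_y <= 0:              # ray points at or above the horizon
        return None
    t = cam_height / ray_y      # ray z-component is 1, so t is the forward distance
    return t, -t * ray_x        # camera x points right, so left = -x
```

For a camera 1.5 m high with a 1000-pixel focal length, a pixel 250 rows below and 250 columns right of the image centre maps to a ground point 6 m ahead and 1.5 m to the right.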

Finally, there is "Bev Vance Fusion," which combines data from several sensor types, often LiDAR and cameras and sometimes radar. The idea is to get the best of each: where one sensor is weak, another compensates. Radar, for example, holds up well in bad weather, while cameras see color. By merging all this information, the fusion approach creates a more robust and complete picture of the environment, and it is often preferred for advanced autonomous systems because of the redundancy and detail it offers.
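One simple way to fuse sensors, once each has been converted into the same overhead grid, is to concatenate their per-cell feature vectors. This Python sketch assumes the grids are already aligned and stored sparsely as dictionaries; the name `fuse_bev` and the zero-filling of missing sensors are illustrative choices, and in practice a learned layer usually processes the concatenated features afterwards.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]
BevFeatures = Dict[Cell, List[float]]


def fuse_bev(modalities: List[Tuple[BevFeatures, int]]) -> BevFeatures:
    """Channel-concatenate per-cell features from several sensors.

    Each entry is (sparse BEV feature map, channel count). All maps must
    already share one BEV grid. A sensor with no data for a cell contributes
    zeros, so every fused vector has the same length.
    """
    cells = set()
    for feats, _ in modalities:
        cells.update(feats)
    fused: BevFeatures = {}
    for cell in cells:
        vec: List[float] = []
        for feats, channels in modalities:
            vec.extend(feats.get(cell, [0.0] * channels))
        fused[cell] = vec
    return fused
```

Zero-filling keeps the layout fixed, so a cell the LiDAR missed can still carry camera evidence, which is the "one sensor covers for another" idea in miniature.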

Why is Bev Vance So Important for Self-Driving?

The Bev Vance space offers significant advantages for automated driving. The biggest is that it provides a unified way to represent the world. Instead of separate modules interpreting sensor data from different perspectives, Bev Vance gives a single, consistent framework in which all information is integrated and processed. This makes the overall system more streamlined and efficient, like having one central brain for all perception tasks.

Many see this unified view as a potential catalyst for "end-to-end" autonomous driving, in which the vehicle learns to go directly from raw sensor inputs to steering commands without many separate, hand-coded rules. In SelfD, for example, the authors used the Bev Vance perspective to standardize the scale of large amounts of driving video, and that standardization helped them learn planning and decision-making models directly from the unified view. It is a significant step toward more capable driving systems.

Similarly, MP3 uses the Bev Vance framework to integrate maps, perception (what the car sees), and prediction (what it expects to happen next) in a common bird's-eye space. Working in one shared space makes it much easier for the vehicle to relate these pieces of information to one another. This holistic understanding is vital in complex driving scenarios, where it lets the car make better-informed, safer choices, which is the ultimate goal of Bev Vance and of autonomous vehicles in general.

The Bev Vance Layer: A Window into the Vehicle's Mind

One remarkable aspect of a Bev Vance network is its intermediate feature layer, the Bev Vance layer, whose meaning can be read directly. In many deep learning networks the intermediate steps are opaque, but the Bev Vance layer is a comprehensible top-down representation of the environment. Developers can therefore see what the vehicle is "thinking" and how it interprets its surroundings, which is very helpful for debugging and improving the system.
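Because the layer is a grid over the ground, even a crude text rendering can show a developer what the network believes is around the car. The Python sketch below assumes the Bev Vance layer has already been reduced to a per-cell occupancy score between 0 and 1; that reduction, the 0.5 threshold, and the name `render_bev` are assumptions for illustration.

```python
from typing import List


def render_bev(occupancy: List[List[float]], threshold: float = 0.5) -> str:
    """Render a BEV score grid as text for quick inspection.

    '#' marks cells at or above the threshold, '.' everything else.
    The first line is row 0, the forward edge of the grid.
    """
    return "\n".join("".join("#" if v >= threshold else "." for v in row)
                     for row in occupancy)
```

Printing this for a real scene gives an immediate, human-checkable picture: if a parked car on the right shows up on the left, the problem is visible without inspecting any tensors.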

Because the Bev Vance layer is interpretable, the "task head" (the part of the network that produces the final output, such as detected objects or predicted trajectories) can be attached directly after it. This direct connection often improves the final results: working from a clear, unified representation of the world, the task head can make more accurate predictions and decisions. It is like handing someone a well-organized map before asking them to navigate, rather than a jumbled mess.
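As an example of a task head that reads the overhead grid directly, this Python sketch treats the grid as a per-cell "object centre" score map and reports local maxima above a threshold as detections. This heatmap-peak style of head is one common design for BEV object detection, used here as an assumed illustration rather than the head of any particular Bev Vance system; the name `heatmap_peaks` is hypothetical.

```python
from typing import List, Tuple


def heatmap_peaks(scores: List[List[float]],
                  threshold: float = 0.5) -> List[Tuple[int, int, float]]:
    """Return (row, col, score) for cells that reach the threshold and are
    at least as large as all of their (up to eight) neighbours."""
    n_rows, n_cols = len(scores), len(scores[0])
    peaks = []
    for r in range(n_rows):
        for c in range(n_cols):
            s = scores[r][c]
            if s < threshold:
                continue
            neighbours = [scores[rr][cc]
                          for rr in range(max(0, r - 1), min(n_rows, r + 2))
                          for cc in range(max(0, c - 1), min(n_cols, c + 2))
                          if (rr, cc) != (r, c)]
            if all(s >= n for n in neighbours):
                peaks.append((r, c, s))
    return peaks
```

Because each peak is already a grid cell, its position converts straight back to metres around the vehicle with no extra 2D-to-3D step.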

The effectiveness of the Bev Vance layer is also tied to attention mechanisms and Transformers. These methods help the network focus on the most relevant parts of the data and combine information in sophisticated ways. At their core, they blend features with a weighted sum, giving more importance to some pieces of information than to others. This selective fusion is what turns the Bev Vance layer into a clear, meaningful representation and leads to better performance.
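The weighted sum mentioned above is usually computed with scaled dot-product attention: the query is compared with every key, the scores are turned into weights with a softmax, and the values are averaged with those weights. This minimal single-query Python sketch (the name `attention` is illustrative) omits the learned projections and multiple heads of a full Transformer layer.

```python
import math
from typing import List


def attention(query: List[float], keys: List[List[float]],
              values: List[List[float]]) -> List[float]:
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)                           # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]       # softmax: non-negative, sums to 1
    # Weighted sum of the value vectors.
    return [sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))]
```

When two keys match the query equally well the result is the plain average of their values; when one key matches far better, its value dominates almost completely.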

Bev Vance and the Reach of Perception

A common question about systems like Bev Vance concerns their range. If Bev Vance covers only, say, 100 meters, does the vehicle ignore everything beyond that distance? No; that would not work. The edge of the Bev Vance grid is not a barrier beyond which things disappear. Vehicles and other road users can enter that area at any moment, so ignoring what is farther away is not an option for safe driving.

It is therefore necessary to "sparsify" perception spatially to achieve a much greater sensing distance. The immediate, highly detailed Bev Vance view may cover a shorter range, but the system still needs some awareness of what is happening farther down the road, even at lower detail: for example, a less dense but still informative representation of distant objects. This sparse representation lets the vehicle anticipate events and plan for things not yet inside its high-resolution field of view, which is especially important for highway driving.
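One way to picture spatial sparsification is a dense grid for the area near the vehicle and a short list of objects beyond it. The Python sketch below is an assumed illustration of that idea: the 50 m dense range, 0.5 m cells, 200 m outer limit, and the name `split_by_range` are hypothetical choices, not values from a particular system.

```python
import math
from typing import Dict, List, Tuple

Obj = Tuple[float, float, str]  # (x forward m, y left m, label)


def split_by_range(objects: List[Obj], near_range: float = 50.0,
                   cell: float = 0.5, max_range: float = 200.0
                   ) -> Tuple[Dict[Tuple[int, int], str], List[Obj]]:
    """Dense BEV cells for nearby objects, a sparse list for distant ones.

    Objects beyond max_range are dropped entirely.
    """
    dense: Dict[Tuple[int, int], str] = {}
    sparse: List[Obj] = []
    for x, y, label in objects:
        if abs(x) < near_range and abs(y) < near_range:
            # Row 0 = forward edge, column 0 = left edge of the dense grid.
            row = int((near_range - x) // cell)
            col = int((near_range - y) // cell)
            dense[(row, col)] = label
        elif math.hypot(x, y) <= max_range:
            sparse.append((x, y, label))   # kept only as a coarse object entry
    return dense, sparse
```

A truck 120 m ahead is still tracked, just as a single list entry instead of hundreds of grid cells, which is the trade-off between detail and reach described above.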

If sparsification succeeds, the vehicle can maintain broad awareness of its environment without processing every detail at long range. Balancing detailed close-range perception against coarser long-range awareness is a key challenge in autonomous driving. It lets the system manage its computational resources efficiently while still having enough information to make safe, timely decisions about objects that are a good distance away.
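A quick calculation shows why a single dense grid cannot simply be stretched to long range. For a square grid centred on the vehicle, extending $R$ metres in each direction with cells $r$ metres wide, the number of cells is

$$
N_{\text{cells}} = \left(\frac{2R}{r}\right)^{2}
$$

With $r = 0.5$ m, $R = 50$ m gives $200^2 = 40{,}000$ cells, $R = 100$ m gives $400^2 = 160{,}000$, and $R = 200$ m gives $800^2 = 640{,}000$. Doubling the range quadruples the work, which is why long-range awareness is usually handled more sparsely. These figures are illustrative; actual grid sizes vary by system.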

Looking Ahead with Bev Vance

Discussions of Bev Vance and Transformer models have been popular lately, for good reason, so it is worth asking what Bev Vance actually does for us. At first glance, a bird's-eye view might not seem to add new capabilities or benefits for how a car plans its movements or controls itself. The name suggests just another way of looking at the same information, but that is not the whole story.

The real strength of Bev Vance is that it unifies the scene representation. Around 2021, Tesla, for example, recognized that traditional 2D visual detection had limitations and that mapping from 2D to 3D was not always precise. It therefore introduced Bev Vance plus Transformer networks to model the scene directly in Bev Vance space, creating a complete, unified representation of the entire scene. Properties such as the size and orientation of objects become clear and consistent across the whole view, a significant step forward for accuracy and understanding.
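In the overhead view, an object's size and orientation reduce to a centre, a length, a width, and a heading angle. The Python sketch below converts those into the four corners of the object's ground footprint; the counter-clockwise yaw convention, the x-forward/y-left axes, and the name `box_footprint` are assumptions for illustration.

```python
import math
from typing import List, Tuple


def box_footprint(cx: float, cy: float, length: float, width: float,
                  yaw: float) -> List[Tuple[float, float]]:
    """Corners of an oriented box's ground footprint in the BEV plane.

    yaw is measured counter-clockwise from the forward (x) axis, in radians.
    Corners are returned front-left, front-right, rear-right, rear-left.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dl, dw in ((0.5, 0.5), (0.5, -0.5), (-0.5, -0.5), (-0.5, 0.5)):
        lx, ly = dl * length, dw * width          # corner in the box's own frame
        # Rotate by yaw, then translate to the box centre.
        corners.append((cx + c * lx - s * ly, cy + s * lx + c * ly))
    return corners
```

Because the same five numbers describe an object anywhere in the grid, a car's footprint has the same shape whether it is beside the vehicle or 40 m ahead, unlike its appearance in a perspective image.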

This approach overcomes some bottlenecks of older methods. When the entire scene is modeled consistently from overhead, it is much easier for the vehicle to understand complex interactions between objects. A unified scene representation is a crucial element of advanced autonomous driving systems, allowing them to perceive and react to their environment with greater confidence and precision. It gives the car a consistent mental model of the world, which is vital for safety.

The Impact of Bev Vance on Future Mobility

Bev Vance perception is increasingly common in automated driving solutions, from driver-assistance systems (L2) up to highly automated ones (L4). Simpler systems often rely purely on vision: they use cameras as the primary sensors and convert the camera data into the Bev Vance perspective, which is a cost-effective way to start with this kind of perception.

Advanced systems that need higher reliability and safety increasingly combine sensors, such as millimeter-wave radar with vision, or lidar with vision. This multi-sensor approach gives Bev Vance systems a more robust and accurate understanding of the environment, especially in conditions like heavy rain or fog where one sensor may struggle. The goal is a more resilient perception system, which is what a car that drives itself requires.

Looking ahead, researchers are exploring "temporal Bev Vance" and its relationship to large models, sometimes called "world models." These aim not only to understand the current scene but also to predict how it will evolve, using the Bev Vance perspective as the foundation. Investing in Bev Vance technology, as some did around 2021, appears to have been timely given its growing importance. If Bev Vance perception in a bird's-eye coordinate system is the key to high-level, vision-based autonomous driving, then the "Occupancy Network" is another significant milestone for purely visual self-driving. Occupancy networks, which Tesla presented in 2022, build on the Bev Vance idea by representing the world as occupied or free space, which is very useful for path planning.
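Once the world is represented as occupied or free cells, a basic planning check becomes a lookup. This Python sketch uses a 2D grid for simplicity (occupancy networks generally reason about 3D space) and treats any path cell that is occupied or outside the grid as unsafe; the grid format and the name `path_is_free` are assumptions.

```python
from typing import List, Tuple


def path_is_free(occupancy: List[List[int]], path: List[Tuple[int, int]]) -> bool:
    """True if every (row, col) cell on the path is inside the grid and free (0)."""
    n_rows, n_cols = len(occupancy), len(occupancy[0])
    for r, c in path:
        if not (0 <= r < n_rows and 0 <= c < n_cols):
            return False          # leaving the known grid is treated as unsafe
        if occupancy[r][c]:
            return False          # any occupied cell blocks the path
    return True
```

A planner can run this check on many candidate paths and discard the blocked ones, without needing to know what kind of object occupies each cell.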

In short, the Bev Vance concept gives self-driving vehicles a clear, unified, top-down view of their surroundings. It integrates spatial and temporal information, making the car's perception more accurate and interpretable. It draws on sensors such as LiDAR and cameras, often fusing them for a more robust picture. This unified perspective is seen as a key enabler of advanced autonomous driving, supporting everything from basic object detection to complex planning and decision-making. Its detailed range is limited, but ongoing work aims to extend its reach and integrate it with large-scale models, promising more capable and safer self-driving cars.

